SmartVision Online 2.7: Performance Test Report

Contents

- DEFINITIONS AND ACRONYMS USED

- OBSERVATION AND RECOMMENDATION

- TEST ENVIRONMENT

- DATABASE & MESSAGE BROKER

- AUTOSCALABLE POD CONFIGURATION

- SMARTVISION APP STACK & AUTOSCALE CONFIGURATIONS

- SMARTVISION ONLINE VERSION

- OCR REPLICA COUNT

- PERFORMANCE TESTING – SOFTWARE USED

- TEST DATA AND TEST APPROACH

- PERFORMANCE TEST – RESULT SUMMARY

- DETAILED TEST RESULTS

- INFRASTRUCTURE RESOURCE USAGE

- TEST EXECUTION RAW DATA

DEFINITIONS AND ACRONYMS USED

| Acronym / Item | Expansion / Definition |
|---|---|
| Metrics | Measurements obtained from executing the performance tests and expressed on a commonly understood scale. |
| Throughput | Number of requests handled per unit of time, for example the number of documents extracted per hour. |
| Workload | Stimulus applied to the system to simulate an actual usage pattern in terms of concurrency, data volumes and transaction mix. |
| PWF | Package Workflow |
| POD | A Pod is a Kubernetes abstraction that represents a group of one or more application containers (such as Docker containers) and some shared resources for those containers. |
| DU | Document Understanding, a SmartOps proprietary AI-based solution for SmartVision. |
| SV | SmartVision |

OBSERVATION AND RECOMMENDATION

- Overall, SmartVision online performance has improved in SV 2.7.

- Throughput improved to 702 docs/hour, compared to 600 docs/hour in SV 2.6.

- In the single user test, extraction of 20 docs took 130 seconds in 2.7, compared to 432 seconds (7.2 minutes) in 2.6.

- In the single user test, the longest time to extract a doc and populate its details in the browser UI was 137 seconds (about 2.3 minutes) in 2.7, compared to 450 seconds (7.5 minutes) in 2.6.

- 2 of 300 docs failed in 2.7, compared to 10 failures in 2.6.

TEST ENVIRONMENT

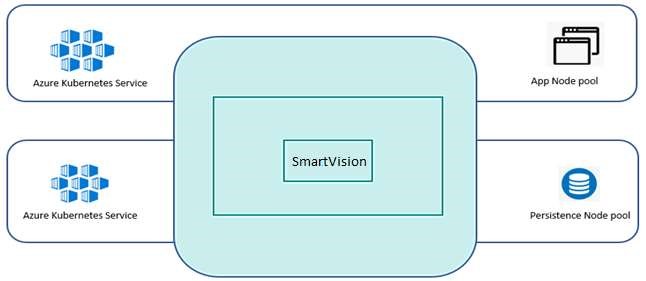

The performance test was conducted on the SmartOps Kubernetes-based infrastructure hosted in the Azure cloud.

The test environment was deployed with the Smart Extract pipeline and its related containers.

The table below summarizes the hardware infrastructure and node count used for the Smart Extract infrastructure.

| Kubernetes Nodes | Hardware Infrastructure | Node Count (Min) | Node Count (Max) |
|---|---|---|---|
| Application Node Pool (stacks: du-liquibase, pwf-liquibase, keycloak-update, clones-upgrade, smartops-keycloak, du-migrations, du-data-migration, du-base, du-ai-base, du-smextract, smartvision-ui, smartvision-api, smartvision-trial-liquibase) | Azure D8s v3: 8 vCPU cores, 32 GB RAM | 4 | 11 |
| Persistent Pool (components: Mongo, MinIO, RabbitMQ, Elasticsearch, Kibana) | Azure D4s v3: 4 vCPU cores, 16 GB RAM | 3 | 6 |
| MySQL | Azure Managed MySQL, General Purpose, 2 cores, 8 GB RAM, 100 GB storage (auto-grow enabled) | NA | NA |

DATABASE & MESSAGE BROKER

The following table lists the replicas configured for the database and message broker components in the Kubernetes cluster. MySQL is deployed as an Azure managed service.

| Stack Name | Container Name | No. of Instances |
|---|---|---|
| Database | mongo | 3 |
| | minio | 2 |
| | elasticsearch | 3 |
| | mysql | Azure Managed Service |
| Message Broker | rabbitmq | 3 |

|

AUTOSCALABLE POD CONFIGURATION

The following table lists the CPU threshold and the minimum and maximum number of replicas configured for each SmartVision component that is autoscaled in the Kubernetes cluster.

| Container Name | CPU Threshold | Min PODS | Max PODS |
|---|---|---|---|
| du-pipeline | 80% | 2 | 2 |
| du-rest | 80% | 2 | 4 |
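The report does not state how autoscaling is implemented. Assuming these settings map onto a standard Kubernetes HorizontalPodAutoscaler that targets average CPU utilization, the sketch below shows how the documented HPA rule, desired = ceil(current replicas × current utilization / target utilization), would turn the 80% threshold and the Min/Max PODS values into a replica count. HPA measures CPU utilization against the pod's CPU request, and it skips scaling when the ratio is within a default tolerance of 0.1. The function name `hpa_desired_replicas` is hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int,
                         current_cpu_pct: float,
                         target_cpu_pct: float,
                         min_pods: int,
                         max_pods: int,
                         tolerance: float = 0.1) -> int:
    """Sketch of the Kubernetes HPA scaling rule (assumption: SV autoscaling uses HPA).

    CPU percentages are relative to the pod's CPU request, as in HPA.
    """
    ratio = current_cpu_pct / target_cpu_pct
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: HPA leaves the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured Min PODS / Max PODS range.
    return max(min_pods, min(max_pods, desired))


# du-rest: threshold 80%, Min 2, Max 4 (from the table above)
print(hpa_desired_replicas(2, 120, 80, 2, 4))  # 3
print(hpa_desired_replicas(2, 200, 80, 2, 4))  # 4 (5 requested, capped at Max PODS)
# du-pipeline: Min == Max == 2, so the count stays at 2
print(hpa_desired_replicas(2, 200, 80, 2, 2))  # 2
```

Under this rule, du-pipeline cannot change its replica count because Min PODS equals Max PODS. Only du-rest can scale, between 2 and 4 pods.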

|

SMARTVISION APP STACK & AUTOSCALE CONFIGURATIONS

The table below lists all SV online application stack names.

| Stack Name | |
|---|---|
| proxy-sql | pwf-liquibase |
| mongo | keycloak-update |
| minio-app-filestore | clones-upgrade |
| minio-model-store | smartops-keycloak |
| invex-model-loader | du-migrations |
| rabbitmq | du-data-migration |
| elasticsearch | du-base |
| kibana | du-ai-base |
| mysql-init | du-smextract |
| phpmyadmin | smartvision-ui |
| du-liquibase | smartvision-api |
| backup-cron-job | smartvision-trial-liquibase |

The table below lists the containers in the SmartVision 2.7 application stack.

To ensure high availability of the non-scalable components and avoid component failures during document processing, 2 instances of each component run in the Kubernetes cluster by default.

| Stack Name | Container Name | Auto Scaling | Scaling Criteria |
|---|---|---|---|
| du-smextract | du-smart-extract-foi-preprocess | | |
| | du-smart-extract-foi-predict | | |
| | du-se-rebound-rest | | |
| | du-searchable-pdf | | |
| | du-smart-extract-foi-postprocess | | |
| | du-smart-extract-table-preprocess | | |
| | du-smart-extract-table-predict | | |
| | du-smart-extract-table-postprocess | | |
| du-base | du-pipeline | Y | 80% CPU |
| | du-page-split | | |
| | du-rest | Y | 80% CPU |
| | du-scheduler | | |
| | du-invoice-image-ocr | Y | 80% CPU |
| | du-tilt-correct | | |
| | du-resolution-correction | | |
| du-ai-base | du-language-classifier | | |
| | du-handwritten-classifier | | |
| | du-ocr-component | | |
| | du-zero-shot-classifier | | |
| | du-keyword-classifier | | |
| | du-document-quality-classifier | | |
| | du-invoice-split | | |
| | du-no-extraction-page-classifier | | |
| | du-barcode-extractor | | |
| | du-relevance-classifier | | |
| | du-logo-extractor | | |
| du-vespa-stk | du-core-nlp | | |
| | du-vespa-foi-preprocess | | |
| | du-vespa-foi-predict | | |
| | du-vespa-foi-postprocess | | |
| | du-vespa-table-preprocess | | |
| | du-vespa-table-predict | | |
| | du-vespa-table-postprocess | | |
| smartvision-ui | smartvisionui | | |

SMARTVISION ONLINE VERSION

| Application Build |
|---|
| SmartVision Online 2.7.2 |

OCR REPLICA COUNT

All tests presented in this report were executed with 2 pod replicas of du-ocr-component.

| POD Replica Name | Count |
|---|---|
| du-ocr-component | 2 |

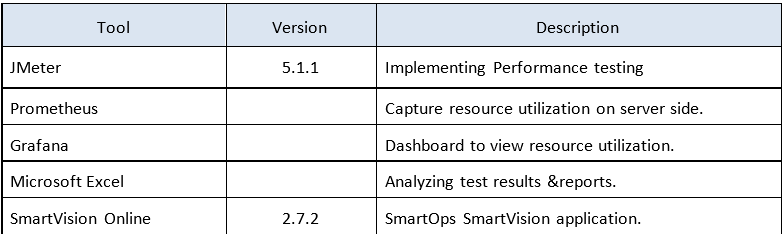

PERFORMANCE TESTING – SOFTWARE USED

The following tools were used as part of performance testing.

TEST DATA AND TEST APPROACH

Two separate tests were defined for this release: a single user test and a 30 concurrent users test. The test data and approach for each test are described in the sections below.

SINGLE USER TEST

A single user logs in to the application and uploads 20 docs at the beginning of the test. The time taken to extract each doc and the time taken to populate its data in the UI are monitored.

30 CONCURRENT USERS TEST

This test uses 300 single-page PDF invoices selected from customer samples.

30 users are created in the system. During the test, a JMeter script uploads the doc files through API calls. This creates a load of 30 concurrent users, with 10 docs uploaded per user. The test runs until all 300 files have been processed. Performance metrics are captured, such as the time taken to process each file and the time taken to populate the doc details in the UI screen.
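The actual load was generated by a JMeter script. For illustration only, the Python sketch below reproduces the same load shape: 30 virtual users run concurrently, and each one uploads its own 10 docs one after another. `upload_doc` is a hypothetical stub that only records the call, because the SmartVision upload endpoint is not given in this report. The file names and user names are also hypothetical.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

NUM_USERS = 30        # concurrent virtual users, as in the test
DOCS_PER_USER = 10    # docs uploaded by each user

_log_lock = threading.Lock()
upload_log: list[tuple[str, str]] = []  # (user, doc) pairs recorded by the stub


def upload_doc(user: str, doc: str) -> None:
    """Hypothetical stand-in for the SmartVision upload API call; it only records the call."""
    with _log_lock:
        upload_log.append((user, doc))


def run_user(user_index: int, docs: list[str]) -> None:
    """One virtual user uploads its own docs sequentially."""
    user = f"user{user_index:02d}"
    for doc in docs:
        upload_doc(user, doc)


def run_load_test(all_docs: list[str]) -> None:
    """Split the files into one disjoint batch per user and run all users concurrently."""
    if len(all_docs) != NUM_USERS * DOCS_PER_USER:
        raise ValueError("expected exactly one batch of docs per user")
    batches = [all_docs[i * DOCS_PER_USER:(i + 1) * DOCS_PER_USER] for i in range(NUM_USERS)]
    with ThreadPoolExecutor(max_workers=NUM_USERS) as pool:
        # list() waits for every user and re-raises any exception from a worker
        list(pool.map(run_user, range(NUM_USERS), batches))


docs = [f"invoice_{n:03d}.pdf" for n in range(NUM_USERS * DOCS_PER_USER)]
run_load_test(docs)
per_user = Counter(user for user, _ in upload_log)
print(len(upload_log), len(per_user))
```

This sketch does not model any pacing (such as think time) that the real JMeter script may have used between uploads.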

PERFORMANCE TEST – RESULT SUMMARY

SINGLE USER TEST

The time taken by a single user to process 20 docs is 2.16 minutes.

| Start Time | End Time | Duration (secs) | Throughput (docs/hour) | Env | Pass/Fail |
|---|---|---|---|---|---|
| 10:51:10 | 10:53:20 | 130 | 554 | PT - azure | 20/0 |

Comparison between 2.6 and 2.7: the time taken to process 20 docs for a single user improved by 5.04 minutes in 2.7 compared to 2.6.

| Test | SV 2.6 Extraction Time (Minutes) | SV 2.7 Extraction Time (Minutes) |
|---|---|---|
| Single user/20 docs | 7.2 | 2.16 |
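The Duration and Throughput columns in the single user summary table can be derived from its Start and End times. Below is a minimal sketch. It assumes throughput is expressed in docs per hour and that both timestamps fall on the same day with no midnight crossing. The helper names are illustrative.

```python
from datetime import datetime

TIME_FMT = "%H:%M:%S"  # strptime also accepts single-digit hours such as "4:41:08"


def duration_secs(start: str, end: str) -> int:
    """Elapsed seconds between two same-day H:MM:SS timestamps."""
    delta = datetime.strptime(end, TIME_FMT) - datetime.strptime(start, TIME_FMT)
    return int(delta.total_seconds())


def docs_per_hour(docs: int, secs: int) -> int:
    """Throughput in docs/hour, rounded to the nearest whole doc."""
    return round(docs * 3600 / secs)


secs = duration_secs("10:51:10", "10:53:20")
print(secs, docs_per_hour(20, secs))  # 130 554
```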

30 CONCURRENT USERS TEST (300 DOCS)

Time taken to process 300 invoices by 30 concurrent users is 25.48 minutes.

| Start Time | End Time | Duration (secs) | Throughput (docs/hour) | Env | Pass/Fail |
|---|---|---|---|---|---|
| 4:41:08 | 5:06:37 | 1529 | 702 | PT - azure | 298/2 |

Comparison between 2.6 and 2.7: the time taken to process 300 docs for 30 concurrent users improved by 39.37 minutes in 2.7 compared to 2.6.

| Test | SV 2.6 Extraction Time (Minutes) | SV 2.7 Extraction Time (Minutes) |
|---|---|---|
| 30 concurrent users/300 docs | 64.85 | 25.48 |
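The worked check below rederives these figures. The reported throughput of 702 docs/hour is consistent with counting only the 298 docs that passed; all 300 docs would give about 706 docs/hour. The time saved follows from the comparison table:

```latex
\begin{aligned}
\text{Throughput} &= \frac{298\ \text{docs}}{1529\ \text{s}} \times 3600\ \tfrac{\text{s}}{\text{h}} \approx 701.6 \approx 702\ \text{docs/hour} \\
\text{Duration} &= \frac{1529\ \text{s}}{60\ \text{s/min}} \approx 25.48\ \text{min} \\
\Delta t &= 64.85 - 25.48 = 39.37\ \text{min}, \qquad \frac{64.85}{25.48} \approx 2.5
\end{aligned}
```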

DETAILED TEST RESULTS

SINGLE USER TEST

DOC EXTRACTION TIME & UI REFRESH TIME

| Doc # | Start Time | End Time | Extraction Time (secs) | UI Refresh Time (secs) |
|---|---|---|---|---|
| 1 | 10:51:10 | 10:51:48 | 38 | 46 |
| 2 | 10:51:10 | 10:51:48 | 38 | 46 |
| 3 | 10:51:10 | 10:51:57 | 47 | 56 |
| 4 | 10:51:11 | 10:51:59 | 48 | 56 |
| 5 | 10:51:11 | 10:52:10 | 59 | 66 |
| 6 | 10:51:11 | 10:52:09 | 58 | 66 |
| 7 | 10:51:11 | 10:52:08 | 57 | 66 |
| 8 | 10:51:12 | 10:52:20 | 68 | 97 |
| 9 | 10:51:12 | 10:52:28 | 76 | 97 |
| 10 | 10:51:12 | 10:52:28 | 76 | 97 |
| 11 | 10:51:12 | 10:52:19 | 67 | 97 |
| 12 | 10:51:13 | 10:52:59 | 106 | 113 |
| 13 | 10:51:13 | 10:52:39 | 86 | 97 |
| 14 | 10:51:13 | 10:52:40 | 87 | 97 |
| 15 | 10:51:14 | 10:53:09 | 115 | 127 |
| 16 | 10:51:14 | 10:53:10 | 116 | 127 |
| 17 | 10:51:14 | 10:52:49 | 95 | 102 |
| 18 | 10:51:15 | 10:53:20 | 125 | 137 |
| 19 | 10:51:15 | 10:53:19 | 124 | 137 |
| 20 | 10:51:16 | 10:53:20 | 124 | 137 |
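Each Extraction Time value equals End Time minus Start Time. Assuming the UI Refresh Time is measured from the same Start Time, the difference between the two is the extra delay before a doc's details appear in the UI. The sketch below recomputes both from the table. It assumes all timestamps fall on the same day, and `ROWS` and `elapsed` are illustrative names.

```python
from datetime import datetime
from statistics import mean

TIME_FMT = "%H:%M:%S"

# (start, end, reported extraction secs, UI refresh secs) for docs 1-20, from the table above.
ROWS = [
    ("10:51:10", "10:51:48", 38, 46), ("10:51:10", "10:51:48", 38, 46),
    ("10:51:10", "10:51:57", 47, 56), ("10:51:11", "10:51:59", 48, 56),
    ("10:51:11", "10:52:10", 59, 66), ("10:51:11", "10:52:09", 58, 66),
    ("10:51:11", "10:52:08", 57, 66), ("10:51:12", "10:52:20", 68, 97),
    ("10:51:12", "10:52:28", 76, 97), ("10:51:12", "10:52:28", 76, 97),
    ("10:51:12", "10:52:19", 67, 97), ("10:51:13", "10:52:59", 106, 113),
    ("10:51:13", "10:52:39", 86, 97), ("10:51:13", "10:52:40", 87, 97),
    ("10:51:14", "10:53:09", 115, 127), ("10:51:14", "10:53:10", 116, 127),
    ("10:51:14", "10:52:49", 95, 102), ("10:51:15", "10:53:20", 125, 137),
    ("10:51:15", "10:53:19", 124, 137), ("10:51:16", "10:53:20", 124, 137),
]


def elapsed(start: str, end: str) -> int:
    """Seconds between two same-day HH:MM:SS timestamps."""
    delta = datetime.strptime(end, TIME_FMT) - datetime.strptime(start, TIME_FMT)
    return int(delta.total_seconds())


extraction = [elapsed(start, end) for start, end, _, _ in ROWS]
# Doc numbers whose reported Extraction Time differs from End - Start (expected: none).
mismatches = [i + 1 for i, (row, secs) in enumerate(zip(ROWS, extraction)) if row[2] != secs]
# Extra wait between extraction finishing and the details showing in the UI.
ui_lag = [row[3] - secs for row, secs in zip(ROWS, extraction)]

print(mismatches, mean(extraction), max(extraction), mean(ui_lag), max(ui_lag))
```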

In SV 2.7, both the doc extraction time and the time required to populate the details in the end user's browser UI have improved.

COMPARISON BETWEEN 2.7 AND 2.6 VERSIONS

| Doc # | SV 2.7 Extraction Time (secs) | SV 2.7 UI Refresh Time (secs) | SV 2.6 Extraction Time (secs) | SV 2.6 UI Refresh Time (secs) |
|---|---|---|---|---|
| 1 | 38 | 46 | 78 | 90 |
| 2 | 38 | 46 | 118 | 120 |
| 3 | 47 | 56 | 67 | 120 |
| 4 | 48 | 56 | 97 | 120 |
| 5 | 59 | 66 | 127 | 150 |
| 6 | 58 | 66 | 96 | 150 |
| 7 | 57 | 66 | 168 | 150 |
| 8 | 68 | 97 | 136 | 210 |
| 9 | 76 | 97 | 167 | 210 |
| 10 | 76 | 97 | 187 | 210 |
| 11 | 67 | 97 | 236 | 240 |
| 12 | 106 | 113 | 236 | 270 |
| 13 | 86 | 97 | 206 | 270 |
| 14 | 87 | 97 | 296 | 300 |
| 15 | 115 | 127 | 276 | 300 |
| 16 | 116 | 127 | 276 | 330 |
| 17 | 95 | 102 | 320 | 360 |
| 18 | 125 | 137 | 345 | 390 |
| 19 | 124 | 137 | 384 | 420 |
| 20 | 124 | 137 | 314 | 450 |
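Averaging the per-doc times over the 20 docs reduces the comparison table to a single figure per metric. Below is a minimal sketch. The mean is an illustrative choice of summary statistic, not one used elsewhere in this report.

```python
from statistics import mean

# Per-doc times in seconds for docs 1-20, from the comparison table above.
SV27_EXTRACTION = [38, 38, 47, 48, 59, 58, 57, 68, 76, 76, 67, 106, 86, 87, 115, 116, 95, 125, 124, 124]
SV27_UI_REFRESH = [46, 46, 56, 56, 66, 66, 66, 97, 97, 97, 97, 113, 97, 97, 127, 127, 102, 137, 137, 137]
SV26_EXTRACTION = [78, 118, 67, 97, 127, 96, 168, 136, 167, 187, 236, 236, 206, 296, 276, 276, 320, 345, 384, 314]
SV26_UI_REFRESH = [90, 120, 120, 120, 150, 150, 150, 210, 210, 210, 240, 270, 270, 300, 300, 330, 360, 390, 420, 450]


def pct_reduction(old: list[int], new: list[int]) -> float:
    """Percentage drop in the mean time from the old version to the new one."""
    return (1 - mean(new) / mean(old)) * 100


for label, old, new in [("Extraction", SV26_EXTRACTION, SV27_EXTRACTION),
                        ("UI refresh", SV26_UI_REFRESH, SV27_UI_REFRESH)]:
    print(f"{label}: mean {mean(old):.1f}s -> {mean(new):.1f}s "
          f"({pct_reduction(old, new):.1f}% lower)")
```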


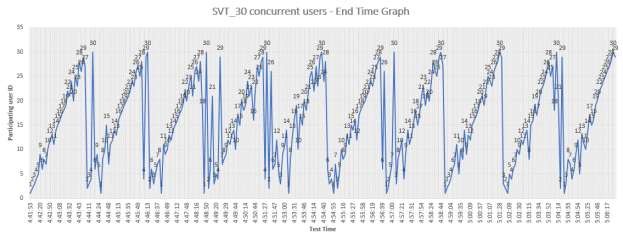

30 CONCURRENT USERS TEST / 300 DOCS

DOC EXTRACTION TIME & UI REFRESH TIME

With 30 concurrent users in the system, each uploading 10 docs, the table below shows the individual doc extraction time and UI refresh time for the first end user.

| Doc # | Start Time | End Time | Extraction Time (secs) | UI Refresh Time (secs) |
|---|---|---|---|---|
| 1 | 4:41:08 | 4:41:53 | 45 | 52 |
| 2 | 4:42:24 | 4:44:35 | 131 | 152 |
| 3 | 4:43:15 | 4:46:38 | 203 | 211 |
| 4 | 4:43:56 | 4:48:50 | 294 | 297 |
| 5 | 4:44:48 | 4:53:04 | 496 | 503 |
| 6 | 4:45:39 | 4:54:55 | 556 | 562 |
| 7 | 4:46:26 | 4:56:50 | 624 | 631 |
| 8 | 4:47:12 | 4:58:55 | 703 | 709 |
| 9 | 4:48:01 | 5:02:00 | 839 | 857 |
| 10 | 4:48:49 | 5:04:24 | 935 | 946 |
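For this first user, the Start Time column shows a new doc uploaded roughly every 51 seconds on average, while consecutive docs finish roughly every 150 seconds. The sketch below computes both intervals from the table, assuming all timestamps fall on the same day. Docs arriving faster than they complete is consistent with the steadily growing extraction times, from 45 s for doc 1 to 935 s for doc 10. This is an interpretation of one user's data only; the system-wide picture also depends on the other 29 users.

```python
from datetime import datetime

TIME_FMT = "%H:%M:%S"

# (start, end) for docs 1-10 of the first user, from the table above.
ROWS = [
    ("4:41:08", "4:41:53"),
    ("4:42:24", "4:44:35"),
    ("4:43:15", "4:46:38"),
    ("4:43:56", "4:48:50"),
    ("4:44:48", "4:53:04"),
    ("4:45:39", "4:54:55"),
    ("4:46:26", "4:56:50"),
    ("4:47:12", "4:58:55"),
    ("4:48:01", "5:02:00"),
    ("4:48:49", "5:04:24"),
]


def to_secs(t: str) -> int:
    """Seconds since midnight for an H:MM:SS timestamp (same-day assumption)."""
    dt = datetime.strptime(t, TIME_FMT)
    return dt.hour * 3600 + dt.minute * 60 + dt.second


starts = [to_secs(start) for start, _ in ROWS]
ends = [to_secs(end) for _, end in ROWS]

extraction = [end - start for start, end in zip(starts, ends)]
upload_gaps = [b - a for a, b in zip(starts, starts[1:])]      # time between consecutive uploads
completion_gaps = [b - a for a, b in zip(ends, ends[1:])]      # time between consecutive completions

print(extraction)
print(sum(upload_gaps) / len(upload_gaps), sum(completion_gaps) / len(completion_gaps))
```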

30 CONCURRENT USERS END TIME GRAPH

RABBITMQ MESSAGE QUEUE LENGTH

INFRASTRUCTURE RESOURCE USAGE

To ensure high availability, 2 pods of each component are deployed in Kubernetes. The table below shows the maximum CPU (in cores) and memory used across both pods during the test.

| Stack Name | Container Name | CPU Limit Set (cores) | CPU Max Used (cores) | Memory Limit Set | Memory Max Used |
|---|---|---|---|---|---|
| du-smextract | du-smart-extract-foi-preprocess | 0.75 | 0.04 | 954 MB | 109.68 MB |
| | du-smart-extract-foi-predict | 4 | 2.56 | 4.657 GB | 2.31 GB |
| | du-se-rebound-rest | 2 | 0.001 | 1.86 GB | 96 MB |
| | du-searchable-pdf | 1 | 0.03 | 954 MB | 86 MB |
| | du-smart-extract-foi-postprocess | 0.75 | 0.03 | 954 MB | 117 MB |
| | du-smart-extract-table-preprocess | 0.75 | 0.23 | 954 MB | 331 MB |
| | du-smart-extract-table-predict | 3 | 2.25 | 3.725 GB | 1.857 GB |
| | du-smart-extract-table-postprocess | 2 | 0.135 | 1.863 GB | 0.583 GB |
| du-base | du-pipeline | 0.5 | 0.24 | 1.4 GB | 686 MB |
| | du-page-split | 0.5 | 0.04 | 954 MB | 137 MB |
| | du-rest | 0.5 | 0.001 | 954 MB | 74 MB |
| | du-scheduler | 0.5 | 0.076 | 954 MB | 74 MB |
| | du-invoice-image-ocr | 1 | 0.023 | 1.9 GB | 118 MB |
| | du-tilt-correct | 1 | 0.04 | 1.4 GB | 107 MB |
| du-ai-base | du-ocr-component | 4 | 0.286 | 3.725 GB | 1.04 GB |
| | du-document-quality-classifier | 0.8 | 0.28 | 1.9 GB | 1.24 GB |
| Database & Message Broker | MongoDB | 0.5 | 0.36 | 7.45 GB | 261 MB |
| | Elastic Search | 1 | 0.7 | 6 GB | 4.74 GB |
| | Minio-model-store | 2 | 0.058 | 3.725 GB | 174 MB |
| | RabbitMQ | 1 | 0.554 | 2 GB | 295 MB |
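Comparing CPU Max Used against CPU Limit Set shows how much CPU headroom each container had at peak. The sketch below computes that ratio from the CPU columns and lists the containers at or above a 70% cut-off. The cut-off is a hypothetical value chosen for illustration. The ratio assumes the limit applies per pod and the maximum is the highest usage seen across the two pods, as described above.

```python
# (container, CPU limit set in cores, max CPU used in cores), from the table above.
CPU_USAGE = [
    ("du-smart-extract-foi-preprocess", 0.75, 0.04),
    ("du-smart-extract-foi-predict", 4, 2.56),
    ("du-se-rebound-rest", 2, 0.001),
    ("du-searchable-pdf", 1, 0.03),
    ("du-smart-extract-foi-postprocess", 0.75, 0.03),
    ("du-smart-extract-table-preprocess", 0.75, 0.23),
    ("du-smart-extract-table-predict", 3, 2.25),
    ("du-smart-extract-table-postprocess", 2, 0.135),
    ("du-pipeline", 0.5, 0.24),
    ("du-page-split", 0.5, 0.04),
    ("du-rest", 0.5, 0.001),
    ("du-scheduler", 0.5, 0.076),
    ("du-invoice-image-ocr", 1, 0.023),
    ("du-tilt-correct", 1, 0.04),
    ("du-ocr-component", 4, 0.286),
    ("du-document-quality-classifier", 0.8, 0.28),
    ("MongoDB", 0.5, 0.36),
    ("Elastic Search", 1, 0.7),
    ("Minio-model-store", 2, 0.058),
    ("RabbitMQ", 1, 0.554),
]

HEADROOM_THRESHOLD = 0.70  # hypothetical cut-off, not a value from the report


def busiest(rows, threshold=HEADROOM_THRESHOLD):
    """Containers whose peak CPU reached at least `threshold` of the limit, highest first."""
    ratios = [(name, used / limit) for name, limit, used in rows]
    return sorted((r for r in ratios if r[1] >= threshold), key=lambda r: r[1], reverse=True)


for name, ratio in busiest(CPU_USAGE):
    print(f"{name}: {ratio:.0%} of CPU limit")
```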

TEST EXECUTION RAW DATA

Test execution raw data is available on the following SharePoint location – SV 2.7.2 Perf Test